We performed three interventions: changing the temperature of the model, shuffling the order of the questions, and changing the wording of the question. We found that models differ in how sensitive they are to such changes, with GPT 3.5 Turbo being the most stable and Llama 3.3 the least:

Surprisingly, on average the models are most sensitive to changes in question wording, but show little variability with increasing temperature:

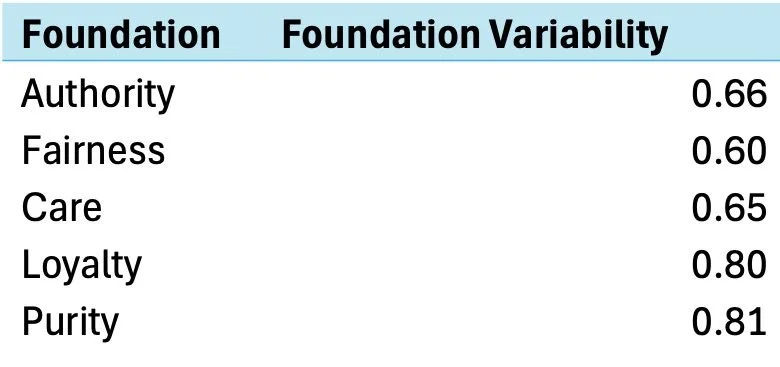

We also found that different moral foundations are more variable than others. For example, Fairness is quite stable while Purity shows more variability: